Published June 21, 2023 (author: )

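vision_transformer in practice: a very simple ViT tutorial for beginners that you should not miss

Abstract

This example uses a subset of the Plant Seedlings dataset, 12 classes in total, to show how to solve an image-classification task with a PyTorch implementation of ViT. In this article you will learn:

1. How to build the ViT model.
2. How to generate the dataset.
3. How to use Cutout data augmentation.
4. How to use Mixup data augmentation.
5. How to implement training and validation.
6. How to adjust the learning rate with cosine annealing.
7. Two ways to write the prediction code.

The code has not been polished much, so it stays simple and easy to follow.

Project structure

    VIT_demo
    ├─models
    │  └─vision_
    ├─data
    │  ├─Black-grass
    │  ├─Charlock
    │  ├─Cleavers
    │  ├─Common Chickweed
    │  ├─Common wheat
    │  ├─Fat Hen
    │  ├─Loose Silky-bent
    │  ├─Maize
    │  ├─Scentless Mayweed
    │  ├─Shepherds Purse
    │  ├─Small-flowered Cranesbill
    │  └─Sugar beet
    ├─mean_
    ├─
    ├─
    ├─
    └─

Several file names in this listing are truncated in the source. Two of the scripts are described there: one computes the mean and std values, the other generates the dataset.

Computing mean and std

To help the model converge faster, we compute the per-channel mean and std of the images. Create the mean/std script (its name appears only as mean_ in the source) and add the following code:

    from torchvision.datasets import ImageFolder
    import torch
    from torchvision import transforms


    def get_mean_and_std(train_data):
        train_loader = torch.utils.data.DataLoader(
            train_data, batch_size=1, shuffle=False, num_workers=0,
            pin_memory=True)
        mean = torch.zeros(3)
        std = torch.zeros(3)
        # Accumulate per-image channel statistics, then average over the dataset.
        for X, _ in train_loader:
            for d in range(3):
                mean[d] += X[:, d, :, :].mean()
                std[d] += X[:, d, :, :].std()
        mean.div_(len(train_data))
        std.div_(len(train_data))
        return list(mean.numpy()), list(std.numpy())


    if __name__ == '__main__':
        train_dataset = ImageFolder(root=r'data1', transform=transforms.ToTensor())
        print(get_mean_and_std(train_dataset))

Run result:

    ([0.3281186, 0.28937867, 0.20702125], [0.09407319, 0.09732835, 0.106712654])

Write this result down; it is needed later.

Generating the dataset

The image-classification dataset we prepared has this structure:

    data
    ├─Black-grass
    ├─Charlock
    ├─Cleavers
    ├─Common Chickweed
    ├─Common wheat
    ├─Fat Hen
    ├─Loose Silky-bent
    ├─Maize
    ├─Scentless Mayweed
    ├─Shepherds Purse
    ├─Small-flowered Cranesbill
    └─Sugar beet

The default loaders in PyTorch and Keras expect the ImageNet-style layout, with separate train and val folders:

    ├─data
    │  ├─val
    │  │  ├─Black-grass
    │  │  ├─Charlock
    │  │  ├─Cleavers
    │  │  ├─Common Chickweed
    │  │  ├─Common wheat
    │  │  ├─Fat Hen
    │  │  ├─Loose Silky-bent
    │  │  ├─Maize
    │  │  ├─Scentless Mayweed
    │  │  ├─Shepherds Purse
    │  │  ├─Small-flowered Cranesbill
    │  │  └─Sugar beet
    │  └─train
    │      ├─Black-grass
    │      ├─Charlock
    │      ├─Cleavers
    │      ├─Common Chickweed
    │      ├─Common wheat
    │      ├─Fat Hen
    │      ├─Loose Silky-bent
    │      ├─Maize
    │      ├─Scentless Mayweed
    │      ├─Shepherds Purse
    │      ├─Small-flowered Cranesbill
    │      └─Sugar beet

Add a conversion script with the following code. It reads the raw images from data1, splits them 70/30, and copies them into data/train and data/val:

    import glob
    import os
    import shutil

    from sklearn.model_selection import train_test_split

    image_list = glob.glob('data1/*/*.png')
    print(image_list)
    file_dir = 'data'
    if os.path.exists(file_dir):
        print('true')
        shutil.rmtree(file_dir)  # delete, then recreate
        os.makedirs(file_dir)
    else:
        os.makedirs(file_dir)

    trainval_files, val_files = train_test_split(image_list, test_size=0.3, random_state=42)
    train_dir = 'train'
    val_dir = 'val'
    train_root = os.path.join(file_dir, train_dir)
    val_root = os.path.join(file_dir, val_dir)

    for file in trainval_files:
        # The parent folder name is the class; normalize Windows separators first.
        file_class = file.replace("\\", "/").split('/')[-2]
        file_name = file.replace("\\", "/").split('/')[-1]
        file_class = os.path.join(train_root, file_class)
        if not os.path.isdir(file_class):
            os.makedirs(file_class)
        shutil.copy(file, file_class + '/' + file_name)

    for file in val_files:
        file_class = file.replace("\\", "/").split('/')[-2]
        file_name = file.replace("\\", "/").split('/')[-1]
        file_class = os.path.join(val_root, file_class)
        if not os.path.isdir(file_class):
            os.makedirs(file_class)
        shutil.copy(file, file_class + '/' + file_name)

Data augmentation: Cutout and Mixup

To improve the score I added two augmentation methods, Cutout and Mixup. Both come from torchtoolbox, which has to be installed first:

    pip install torchtoolbox

Cutout is applied inside the transforms:

    from torchtoolbox.transform import Cutout

    # Data preprocessing
    transform = transforms.Compose([
        transforms.Resize((224, 224)),
        Cutout(),
    ])

Mixup is applied inside the train method. It needs this import:

    from torchtoolbox.tools import mixup_data, mixup_criterion

and is used in the batch loop like this:

    for batch_idx, (data, target) in enumerate(train_loader):
        data, target = data.to(device, non_blocking=True), target.to(device, non_blocking=True)
        data, labels_a, labels_b, lam = mixup_data(data, target, alpha)
        optimizer.zero_grad()
        output = model(data)
        loss = mixup_criterion(criterion, output, labels_a, labels_b, lam)
        loss.backward()
        optimizer.step()
        print_loss = loss.data.item()

Importing the libraries used by the project

    import torch.optim as optim
    import torch
    import torch.nn as nn
    import torch.nn.parallel
    import torch.utils.data
    import torch.utils.data.distributed
    import torchvision.transforms as transforms
    import torchvision.datasets as datasets
    # Module path reconstructed from the truncated "models/vision_" entry; adjust it to your file name.
    from models.vision_transformer import deit_tiny_patch16_224
    from torchtoolbox.tools import mixup_data, mixup_criterion
    from torchtoolbox.transform import Cutout

Setting the global parameters

Set the learning rate, batch size, number of epochs and so on, and check whether a GPU is available; if not, fall back to the CPU. A GPU is recommended, because training on the CPU is very slow.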

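    # Set the global parameters
    modellr = 1e-4
    BATCH_SIZE = 16
    EPOCHS = 300
    DEVICE = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

Image preprocessing and augmentation

The preprocessing is simple: Resize, Cutout, and normalization. Pass the mean and std values computed earlier to transforms.Normalize.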
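As a rough illustration of what the normalization step does with those statistics, here is a minimal pure-Python sketch of the per-channel formula (value - mean) / std applied to a single RGB pixel already scaled to [0, 1]. The helper name normalize_pixel is hypothetical and exists only for this example; the tutorial itself relies on the torchvision transform.

```python
# Per-channel statistics reported earlier in the article.
MEAN = [0.3281186, 0.28937867, 0.20702125]
STD = [0.09407319, 0.09732835, 0.106712654]


def normalize_pixel(rgb, mean=MEAN, std=STD):
    """Apply (value - mean) / std to each channel of one RGB pixel in [0, 1].

    Hypothetical helper for illustration; it mirrors the arithmetic of
    per-channel normalization, not the library implementation.
    """
    return [(v - m) / s for v, m, s in zip(rgb, mean, std)]


# A pixel equal to the dataset mean is mapped to zero in every channel.
print(normalize_pixel(MEAN))
# A mid-gray pixel ends up well above zero because the dataset is fairly dark.
print(normalize_pixel([0.5, 0.5, 0.5]))
```

After this step each channel has roughly zero mean and unit spread over the dataset, which is the property the tutorial relies on for faster convergence.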

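    # Data preprocessing
    transform = transforms.Compose([
        transforms.Resize((224, 224)),
        Cutout(),
        transforms.ToTensor(),
        transforms.Normalize([0.3281186, 0.28937867, 0.20702125], [0.09407319, 0.09732835, 0.106712654])
    ])
    transform_test = transforms.Compose([
        transforms.Resize((224, 224)),
        transforms.ToTensor(),
        transforms.Normalize([0.3281186, 0.28937867, 0.20702125], [0.09407319, 0.09732835, 0.106712654])
    ])

Reading the data

Read the data with PyTorch's default ImageFolder loader, then print dataset_train.class_to_idx; this mapping is needed again at prediction time.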
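Because the class order matters again at prediction time, it may help to see how the mapping comes about. torchvision's ImageFolder sorts the class folder names and numbers them from 0; the sketch below reproduces that rule in plain Python for the twelve folders of this dataset. The function name build_class_to_idx is hypothetical and only illustrates the rule.

```python
# Class folder names of the dataset, deliberately listed out of order.
CLASS_DIRS = [
    'Sugar beet', 'Maize', 'Charlock', 'Common wheat', 'Black-grass',
    'Fat Hen', 'Cleavers', 'Shepherds Purse', 'Common Chickweed',
    'Small-flowered Cranesbill', 'Loose Silky-bent', 'Scentless Mayweed',
]


def build_class_to_idx(class_names):
    """Sort the folder names and number them from 0 (illustrative helper)."""
    return {name: idx for idx, name in enumerate(sorted(class_names))}


class_to_idx = build_class_to_idx(CLASS_DIRS)
# The inverse mapping is what a prediction script needs.
idx_to_class = {idx: name for name, idx in class_to_idx.items()}

for name, idx in class_to_idx.items():
    print(idx, name)
```

Since the order depends only on the folder names, renaming a class folder after training can silently shift every index that follows it.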

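    # Read the data
    dataset_train = datasets.ImageFolder('data/train', transform=transform)
    dataset_test = datasets.ImageFolder("data/val", transform=transform_test)
    print(dataset_train.class_to_idx)

    # Wrap the datasets in loaders
    train_loader = torch.utils.data.DataLoader(dataset_train, batch_size=BATCH_SIZE, shuffle=True)
    test_loader = torch.utils.data.DataLoader(dataset_test, batch_size=BATCH_SIZE, shuffle=False)

The printed class_to_idx is:

    {'Black-grass': 0, 'Charlock': 1, 'Cleavers': 2, 'Common Chickweed': 3, 'Common wheat': 4, 'Fat Hen': 5,
     'Loose Silky-bent': 6, 'Maize': 7, 'Scentless Mayweed': 8, 'Shepherds Purse': 9, 'Small-flowered Cranesbill': 10,
     'Sugar beet': 11}

Setting up the model

The loss function is nn.CrossEntropyLoss(). The model is deit_tiny_patch16_224 with pretrained weights enabled, and its classification head is replaced so that it outputs 12 classes. The optimizer is Adam, and the learning rate follows a cosine-annealing schedule. I modified the original model script: it can currently load .pth pretrained weights, but not .npz ones.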
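To make the cosine-annealing schedule concrete, here is a small sketch of the closed-form curve that the PyTorch documentation gives for CosineAnnealingLR, evaluated with the values used in this tutorial (base rate 1e-4, T_max=20, eta_min=1e-9). The function cosine_annealing_lr is a hypothetical stand-in written for illustration, not the scheduler itself.

```python
import math


def cosine_annealing_lr(epoch, base_lr=1e-4, t_max=20, eta_min=1e-9):
    """Closed-form cosine annealing: starts at base_lr, reaches eta_min at t_max."""
    return eta_min + 0.5 * (base_lr - eta_min) * (1 + math.cos(math.pi * epoch / t_max))


# Print the rate at a few epochs to see the shape of the curve.
for epoch in (0, 5, 10, 15, 20, 30, 40):
    print(epoch, f"{cosine_annealing_lr(epoch):.9f}")
```

Note that the cosine is periodic: with T_max=20 the rate falls for 20 epochs and, if the scheduler follows this formula beyond T_max, rises again over the next 20. With 300 epochs the learning rate therefore cycles several times rather than decaying once.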

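    # Instantiate the model and move it to the GPU
    criterion = nn.CrossEntropyLoss()
    model_ft = deit_tiny_patch16_224(pretrained=True)
    print(model_ft)
    num_ftrs = model_ft.head.in_features
    model_ft.head = nn.Linear(num_ftrs, 12, bias=True)
    # The initializer name is partly lost in the source ("..._uniform_"); xavier_uniform_ is assumed here.
    nn.init.xavier_uniform_(model_ft.head.weight)
    model_ft.to(DEVICE)
    print(model_ft)

    # Use the simple, brute-force Adam optimizer with a low learning rate
    optimizer = optim.Adam(model_ft.parameters(), lr=modellr)
    cosine_schedule = optim.lr_scheduler.CosineAnnealingLR(optimizer=optimizer, T_max=20, eta_min=1e-9)

Defining the training and validation functions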

    # Training procedure
    alpha = 0.2


    def train(model, device, train_loader, optimizer, epoch):
        model.train()
        sum_loss = 0
        total_num = len(train_loader.dataset)
        print(total_num, len(train_loader))
        for batch_idx, (data, target) in enumerate(train_loader):
            data, target = data.to(device, non_blocking=True), target.to(device, non_blocking=True)
            # Mixup: blend the batch with a shuffled copy of itself
            data, labels_a, labels_b, lam = mixup_data(data, target, alpha)
            optimizer.zero_grad()
            output = model(data)
            loss = mixup_criterion(criterion, output, labels_a, labels_b, lam)
            loss.backward()
            optimizer.step()
            lr = optimizer.state_dict()['param_groups'][0]['lr']
            print_loss = loss.data.item()
            sum_loss += print_loss
            if (batch_idx + 1) % 10 == 0:
                print('Train Epoch: {} [{}/{} ({:.0f}%)]\tLoss: {:.6f}\tLR:{:.9f}'.format(
                    epoch, (batch_idx + 1) * len(data), len(train_loader.dataset),
                    100. * (batch_idx + 1) / len(train_loader), loss.item(), lr))
        ave_loss = sum_loss / len(train_loader)
        print('epoch:{},loss:{}'.format(epoch, ave_loss))


    ACC = 0  # best validation accuracy seen so far
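The Mixup step inside train can be hard to picture from the two library calls alone. The sketch below shows the general Mixup recipe in plain Python: draw a mixing weight lam from a Beta(alpha, alpha) distribution, blend two inputs with it, and blend the two losses with the same weight. The helper names are hypothetical, and this illustrates the technique in general rather than the internals of torchtoolbox.

```python
import random


def sample_lam(alpha=0.2, rng=random):
    """Draw the mixing weight from Beta(alpha, alpha); 1.0 disables mixing."""
    return rng.betavariate(alpha, alpha) if alpha > 0 else 1.0


def mixup_pair(x_a, x_b, lam):
    """Blend two equally sized inputs element by element."""
    return [lam * a + (1 - lam) * b for a, b in zip(x_a, x_b)]


def mixup_loss(loss_a, loss_b, lam):
    """Weight the loss against each original label by the same lam."""
    return lam * loss_a + (1 - lam) * loss_b


lam = sample_lam(alpha=0.2)
mixed = mixup_pair([1.0, 0.0], [0.0, 1.0], lam)
print(lam, mixed)
```

With a small alpha such as 0.2, the Beta distribution puts most of its mass near 0 and 1, so most mixed samples stay close to one of the two originals.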

    # Validation procedure
    def val(model, device, test_loader):
        global ACC
        model.eval()
        test_loss = 0
        correct = 0
        total_num = len(test_loader.dataset)
        print(total_num, len(test_loader))
        with torch.no_grad():
            for data, target in test_loader:
                # Variable wrappers are no longer needed; tensors can be moved directly.
                data, target = data.to(device), target.to(device)
                output = model(data)
                loss = criterion(output, target)
                _, pred = torch.max(output.data, 1)
                correct += torch.sum(pred == target)
                print_loss = loss.data.item()
                test_loss += print_loss
            correct = correct.data.item()
            acc = correct / total_num
            avgloss = test_loss / len(test_loader)
            print('\nVal set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(
                avgloss, correct, len(test_loader.dataset), 100 * acc))
            if acc > ACC:
                # epoch and model_ft are module-level names set by the training script.
                torch.save(model_ft, 'model_' + str(epoch) + '_' + str(round(acc, 3)) + '.pth')
                ACC = acc

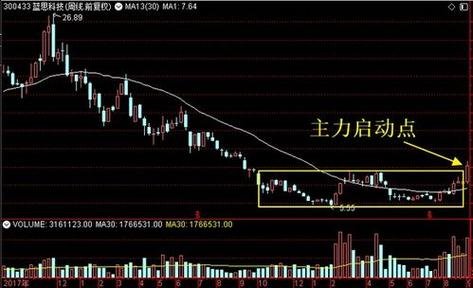

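    # Training
    for epoch in range(1, EPOCHS + 1):
        train(model_ft, DEVICE, train_loader, optimizer, epoch)
        cosine_schedule.step()
        val(model_ft, DEVICE, test_loader)

Run result: (screenshot not reproduced here)

Testing

Here is a general approach: load the test images by hand and run the prediction yourself. The test images are stored in a single directory (test/ in the code below). The steps are:

Step 1: define the classes. Their order must match the class order used during training, so do not change it.
Step 2: define the transforms. Use the same transforms as for the validation set, without any augmentation.
Step 3: load the model and move it to DEVICE.
Step 4: read each image and predict its class. Read the images with Image from the PIL library, not with cv2: the torchvision transforms used here expect PIL images, not the NumPy arrays that cv2 returns.

    import torch.utils.data.distributed
    import torchvision.transforms as transforms
    from PIL import Image
    from torch.autograd import Variable
    import os

    classes = ('Black-grass', 'Charlock', 'Cleavers', 'Common Chickweed',
               'Common wheat', 'Fat Hen', 'Loose Silky-bent',
               'Maize', 'Scentless Mayweed', 'Shepherds Purse', 'Small-flowered Cranesbill', 'Sugar beet')
    transform_test = transforms.Compose([
        transforms.Resize((224, 224)),
        transforms.ToTensor(),
        transforms.Normalize([0.3281186, 0.28937867, 0.20702125], [0.09407319, 0.09732835, 0.106712654])
    ])

    DEVICE = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    # The checkpoint file name is missing in the source; set it to one of the
    # model_<epoch>_<acc>.pth files saved during training.
    MODEL_PATH = ""
    model = torch.load(MODEL_PATH)
    model.eval()
    model.to(DEVICE)

    path = 'test/'
    testList = os.listdir(path)
    for file in testList:
        # Convert to RGB so the input has 3 channels, as in training.
        img = Image.open(path + file).convert('RGB')
        img = transform_test(img)
        img.unsqueeze_(0)  # add the batch dimension
        img = Variable(img).to(DEVICE)
        out = model(img)
        # Predict
        _, pred = torch.max(out.data, 1)
        print('Image Name:{},predict:{}'.format(file, classes[pred.data.item()]))

Run result: (screenshot not reproduced here)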
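The final step of the prediction loop, picking the highest-scoring output and mapping it back to a class name, can be illustrated without PyTorch. This is a minimal sketch with made-up logits; softmax and predict_label are hypothetical helper names, and the class tuple is the one from the test script.

```python
import math

CLASSES = ('Black-grass', 'Charlock', 'Cleavers', 'Common Chickweed',
           'Common wheat', 'Fat Hen', 'Loose Silky-bent', 'Maize',
           'Scentless Mayweed', 'Shepherds Purse',
           'Small-flowered Cranesbill', 'Sugar beet')


def softmax(logits):
    """Turn raw scores into probabilities (shifted by the max for stability)."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_label(logits, classes=CLASSES):
    """Return the class name and probability of the highest-scoring logit."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return classes[best], probs[best]


# Made-up scores for one image: index 7 (Maize) has the largest value.
example_logits = [0.1, -1.2, 0.3, 0.0, 0.5, -0.7, 1.1, 4.2, 0.2, -0.3, 0.9, 0.4]
label, prob = predict_label(example_logits)
print(label, round(prob, 3))
```

The lookup is only correct if CLASSES is in the same order as the class_to_idx mapping printed during training, which is why the tutorial insists on not reordering it.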

